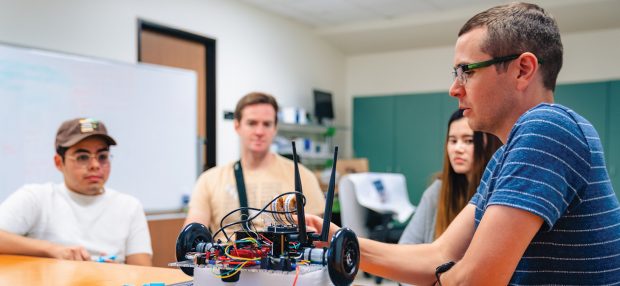

Kenneth Gonzalez ’24, Simon Heck ’22 and Liz Johnson ’24 work with Anthony Clark, assistant professor of computer science.

Someday, when a storm downs trees and power lines on campus or elsewhere, emergency workers may turn to autonomous robots for help with immediate surveillance.

“Maybe you want a robot to roam around campus, because it’s safer for them than for a human,” says Anthony Clark, assistant professor of computer science. “Maybe you have 10 robots that can take pictures and report back, ‘Hey, there’s a tree down here, a limb fallen there, this looks like a power line that’s down.’” Technicians could then be dispatched immediately to the correct location, he adds.

That day may not be too far off, thanks to research being conducted by Clark and three Pomona computer science majors. Right now they are working on computer simulations, exploring how to train autonomous robots to navigate the campus using machine learning. By spring, they hope to test their methods on actual robots, prototypes of which are already under construction elsewhere in Clark’s lab.

The group scoured the campus last summer to find a building with an interior that would present challenges to the autonomous robots. They settled on the Oldenborg Center because it “was potentially confusing enough for a robot trying to drive around,” with one hallway, for instance, leading to stairs in one direction and a ramp in the other.

Machine learning, Clark explains, is a subset of artificial intelligence. “It is basically an automated system that makes some decisions, and those automated decisions are based on a bunch of training data.”

To generate the data, the team created an exquisitely detailed schematic of the Oldenborg interior, down to a water fountain in a hallway. Kenneth Gonzalez ’24 took 2,000 photos and used photogrammetry software to determine how many images the robot would need for correct decision-making. Liz Johnson ’24 created another model with the flexibility to change various elements—from carpet to wood or even grass on the floors, for example, or rocks on the ceiling. Simon Heck ’22 worked on the back-end coding.

“The reason why we want to modify the environment, like having different lighting and changing textures, is so the robot is able to generalize,” says Clark. “The dataset will have larger amounts of diverse environments. We don’t want it to get confused if it’s going down a hallway and all of a sudden there’s a new painting on the wall.”

Clark says that once the group has models that work in virtual environments and transfer well to the physical world, the team will make the tasks more challenging. One idea is to create autonomous robots that fly rather than roll. “It’s pretty much the same process,” Clark says, “but it’s a lot more complicated.”

The goal, Clark says, “is a better way to make machine learning models transfer to a real-world device. To me, that means it’s less likely to bump into walls, and it’s a lot safer and more energy efficient.”

What keeps him up at night is the possibility that, after a machine has been trained, a person taller than anyone in the dataset, for example, enters the field, and the robot mischaracterizes what they are and runs into them. “I’m hoping the big takeaway from this work is how do you automatically find things that you weren’t necessarily looking for?”